An alternative derivation of Shannon entropy.

Shannon’s seminal paper “A Mathematical Theory of Communication” introduced the concept of entropy as a measure of information. The paper is a cornerstone in the field of information theory, and it has had a profound impact on various scientific disciplines. The concept of entropy is widely used in physics, computer science, and statistics, among others. In this blog post, I would like to present an alternative derivation of Shannon entropy based on a result from coding theory, the Kraft-McMillan inequality.

This derivation, among others, will be included in my book “Data Compression in Depth”. The book is a comprehensive guide to data compression, covering:

- Theoretical foundations: probability theory, information theory, asymptotic equipartition theorem, etc.

- Practical applications: the book presents a variety of algorithms and techniques alongside example implementations in the ISO C99 programming language.

- Latest advancements in the field, such as Asymmetric Numeral Systems (ANS).

If you are interested in the book, make sure to follow my website for updates; I also maintain an RSS feed for the newest blog posts.

Preliminaries

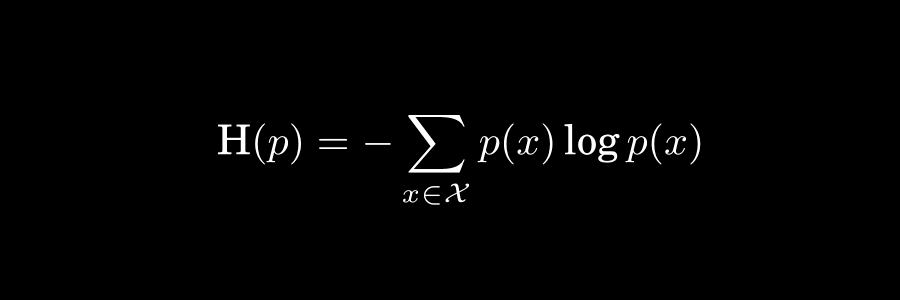

We introduce the Shannon entropy of a message ensemble $X$ as follows:

$$H(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x)$$

where $\mathcal{X}$ is the set of possible values of $X$, and $p$ is the probability mass function of $X$. The logarithm is generally taken to base 2, and the entropy is then measured in bits. An optimal code, if we allow codeword lengths to be non-integer, assigns codeword lengths $l(x)$ to the values of $X$ such that the average codeword length is precisely the entropy of the message ensemble. Hence, for all such optimal codes, the following holds:

$$\sum_{x \in \mathcal{X}} p(x)\,l(x) = H(X)$$
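As a quick sanity check, the entropy and the average codeword length can be evaluated numerically. The following Python sketch uses helper names of my own choosing (`entropy` and `expected_length` are hypothetical) and a dyadic distribution, for which an integer-length code happens to reach the entropy exactly:

```python
import math

def entropy(pmf):
    """Shannon entropy H(X) in bits of a PMF given as {symbol: probability}."""
    # Terms with p(x) = 0 contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def expected_length(pmf, lengths):
    """Average codeword length: sum over x of p(x) * l(x)."""
    return sum(pmf[x] * lengths[x] for x in pmf)

# A dyadic example: every probability is a power of two.
pmf = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = {"a": 1, "b": 2, "c": 3, "d": 3}  # e.g. codewords 0, 10, 110, 111

print(entropy(pmf))                   # 1.75
print(expected_length(pmf, lengths))  # 1.75
```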

The Kraft-McMillan inequality states that, given a uniquely decodable binary code with codeword lengths $l(x)$, the following holds:

$$\sum_{x \in \mathcal{X}} 2^{-l(x)} \le 1$$

If the inequality is satisfied with equality, then the code is complete (i.e. adding a new codeword and associating it with a symbol would make the code no longer uniquely decodable). The Kraft-McMillan inequality is also a necessary and sufficient condition for existence: a uniquely decodable (and, in fact, a prefix) code with integer codeword lengths $l(x)$ exists if and only if these lengths satisfy the inequality.
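The inequality is easy to check mechanically for a candidate set of integer lengths. A minimal Python sketch, assuming a binary code alphabet by default; `kraft_sum` and `classify` are hypothetical helper names, and `fractions.Fraction` keeps the sum exact so that the equality case is detected reliably:

```python
from fractions import Fraction

def kraft_sum(lengths, radix=2):
    """Exact Kraft-McMillan sum: sum of radix**(-l) over all codeword lengths."""
    return sum(Fraction(1, radix ** l) for l in lengths)

def classify(lengths):
    """Classify a multiset of integer codeword lengths."""
    s = kraft_sum(lengths)
    if s > 1:
        return "infeasible"   # no uniquely decodable code has these lengths
    if s == 1:
        return "complete"     # a prefix code exists and no codeword can be added
    return "incomplete"       # a prefix code exists, with room to spare

print(classify([1, 2, 3, 3]))  # complete   (1/2 + 1/4 + 1/8 + 1/8 = 1)
print(classify([1, 2, 3]))     # incomplete (7/8 < 1)
print(classify([1, 1, 2]))     # infeasible (5/4 > 1)
```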

The derivation

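Suppose that we do not know the formula for $H(X)$. We wish to minimise the expected codeword length $L = \sum_{x \in \mathcal{X}} p(x)\,l(x)$ while satisfying the Kraft-McMillan inequality. Treating the codeword lengths as real numbers, we consider the constrained minimisation via Lagrange multipliers:

$$\mathcal{J} = \sum_{x \in \mathcal{X}} p(x)\,l(x) + \lambda \left( \sum_{x \in \mathcal{X}} 2^{-l(x)} - 1 \right)$$

Consequently, setting the partial derivative with respect to each $l(x)$ to zero gives:

$$\frac{\partial \mathcal{J}}{\partial l(x)} = p(x) - \lambda \ln 2 \cdot 2^{-l(x)} = 0$$

Solving for $2^{-l(x)}$ yields:

$$2^{-l(x)} = \frac{p(x)}{\lambda \ln 2}$$

We come back to the Kraft-McMillan constraint and simplify using the fact that a PMF sums to one:

$$\sum_{x \in \mathcal{X}} 2^{-l(x)} = \frac{1}{\lambda \ln 2} \sum_{x \in \mathcal{X}} p(x) = \frac{1}{\lambda \ln 2} \le 1$$

To obtain a complete code, we require equality and solve for $\lambda$, which yields $\lambda = \frac{1}{\ln 2}$. Substituting back yields:

$$2^{-l(x)} = p(x)$$

Taking the base-2 logarithm of both sides and multiplying by $-1$ yields:

$$l(x) = -\log_2 p(x)$$

The quantity $-\log_2 p(x)$ is frequently called the self-information of $x$; it measures the amount of information, in bits, gained when the value $x$ is observed. This choice of (generally non-integer) codeword lengths yields an optimal code, whose average length is:

$$L^{*} = \sum_{x \in \mathcal{X}} p(x)\,l(x) = -\sum_{x \in \mathcal{X}} p(x) \log_2 p(x) = H(X)$$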

Hence, we have shown that the theoretical limit for compressing memoryless (IID) sources is the Shannon entropy.
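The result can be checked numerically. A minimal Python sketch, assuming an arbitrary three-symbol PMF chosen purely for illustration: the ideal lengths meet the Kraft-McMillan constraint with equality and average to $H(X)$, while randomly drawn alternative lengths that also meet the constraint never do better on average (this is Gibbs' inequality in disguise). The helper `ideal_lengths` is a hypothetical name:

```python
import math
import random

def ideal_lengths(pmf):
    """Non-integer codeword lengths l(x) = -log2 p(x), i.e. the self-information."""
    return {x: -math.log2(p) for x, p in pmf.items()}

pmf = {"a": 0.6, "b": 0.3, "c": 0.1}  # an arbitrary, non-dyadic example
l_star = ideal_lengths(pmf)

h = -sum(p * math.log2(p) for p in pmf.values())  # Shannon entropy H(X)
avg = sum(pmf[x] * l_star[x] for x in pmf)         # average ideal length
kraft = sum(2 ** -l for l in l_star.values())      # Kraft-McMillan sum

print(f"H = {h:.4f}, average length = {avg:.4f}")  # H = 1.2955, average length = 1.2955
print(f"Kraft sum = {kraft:.6f}")                  # Kraft sum = 1.000000

# Competing real-valued lengths that also meet the constraint with equality:
# l(x) = -log2 q(x) for a random PMF q. None of them beats H(X) on average,
# which is what the stationary point of the Lagrangian predicts.
random.seed(0)
for _ in range(1000):
    w = {x: random.random() + 1e-9 for x in pmf}
    total = sum(w.values())
    lengths = {x: -math.log2(w[x] / total) for x in pmf}
    assert sum(pmf[x] * lengths[x] for x in pmf) >= h - 1e-12
```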

Non-integer codeword lengths

Of course, it is not feasible to build, e.g., a Huffman codebook that assigns one and a half bits to a symbol. Merely rounding the codeword lengths to integers will not necessarily yield an optimal code. It is easy to see that it does, however, if the input follows a dyadic distribution (i.e. all probabilities are of the form $2^{-k}$ for a non-negative integer $k$), since the self-information is then already an integer. More generally, Shannon coding assigns codewords of lengths equal to the self-information rounded up, $l(x) = \lceil -\log_2 p(x) \rceil$; these lengths satisfy the Kraft-McMillan inequality because $2^{-l(x)} \le p(x)$. The upper bound $H(X) + 1$ on its average length follows from noticing that $l(x) < -\log_2 p(x) + 1$, and thus $\sum_{x} p(x)\,l(x) < H(X) + 1$. Huffman coding assigns integer codeword lengths that are optimal under this integer constraint, so its average length $L$ satisfies $H(X) \le L < H(X) + 1$.
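To make the comparison concrete, here is a small Python sketch (all function names are hypothetical) that computes Shannon code lengths and Huffman code lengths for the same illustrative non-dyadic PMF and checks both against the bound $H(X) \le L < H(X) + 1$. Only the codeword lengths are computed, not the codewords themselves:

```python
import heapq
import math

def shannon_lengths(pmf):
    """Shannon coding: l(x) = ceil(-log2 p(x)), the self-information rounded up."""
    return {x: math.ceil(-math.log2(p)) for x, p in pmf.items()}

def huffman_lengths(pmf):
    """Huffman codeword lengths: repeatedly merge the two least probable
    subtrees; every symbol in a merged subtree moves one level deeper."""
    if len(pmf) == 1:
        return {x: 1 for x in pmf}  # a lone symbol still needs a 1-bit codeword
    # Heap entries: (probability, unique tie-breaker, symbols in the subtree).
    heap = [(p, i, [x]) for i, (x, p) in enumerate(pmf.items())]
    heapq.heapify(heap)
    lengths = {x: 0 for x in pmf}
    counter = len(heap)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for x in s1 + s2:
            lengths[x] += 1
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths

def avg_len(pmf, lengths):
    return sum(pmf[x] * lengths[x] for x in pmf)

pmf = {"a": 0.6, "b": 0.3, "c": 0.1}
h = -sum(p * math.log2(p) for p in pmf.values())
ls, lh = shannon_lengths(pmf), huffman_lengths(pmf)

print(ls)  # {'a': 1, 'b': 2, 'c': 4}
print(lh)  # {'a': 1, 'b': 2, 'c': 2}
print(f"H = {h:.4f}, Shannon = {avg_len(pmf, ls):.2f}, Huffman = {avg_len(pmf, lh):.2f}")
# H = 1.2955, Shannon = 1.60, Huffman = 1.40
```

Both codes land within one bit of the entropy, and Huffman coding is never worse than Shannon coding, since it is optimal among integer-length codes.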

Algorithms that can effectively spend a fractional number of bits on a symbol, such as ANS or arithmetic coding, are said to make use of fractional bits. This technique allows for the construction of codes that come arbitrarily close to the Shannon limit. In practice, integer (finite-precision) implementations of arithmetic coding are not exactly optimal, but they still usually achieve a better compression ratio than Huffman coding.
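The effect of fractional bits can be illustrated with an exact, infinite-precision arithmetic coder built on Python's `fractions.Fraction`. This is a minimal sketch for illustration only, not a practical implementation: real coders use finite-precision integer arithmetic with renormalisation, and here the message length `n` is assumed to be transmitted separately. Each symbol narrows the interval by a factor of $p(x)$, and the emitted codeword, a dyadic sub-interval of the final interval, needs fewer than $-\log_2 P(\text{message}) + 2$ bits. The names `intervals`, `encode` and `decode` are hypothetical:

```python
import math
from fractions import Fraction

def intervals(pmf):
    """Map each symbol to (cum, p): its sub-interval [cum, cum + p) of [0, 1)."""
    out, cum = {}, Fraction(0)
    for x, p in pmf.items():
        out[x] = (cum, p)
        cum += p
    return out

def encode(msg, pmf):
    """Return (m, k): the codeword is the k-bit binary expansion of m / 2**k."""
    table = intervals(pmf)
    low, width = Fraction(0), Fraction(1)
    for x in msg:
        cum, p = table[x]
        low += width * cum
        width *= p
    # Smallest k with 2**-k <= width / 2, so the dyadic interval
    # [m / 2**k, (m + 1) / 2**k) lies entirely inside [low, low + width).
    k = 0
    while width * 2 ** k < 2:
        k += 1
    m = math.ceil(low * 2 ** k)
    return m, k

def decode(m, k, n, pmf):
    """Decode n symbols from the point m / 2**k by retracing the intervals."""
    table = intervals(pmf)
    point, out = Fraction(m, 2 ** k), []
    low, width = Fraction(0), Fraction(1)
    for _ in range(n):
        for x, (cum, p) in table.items():
            lo, w = low + width * cum, width * p
            if lo <= point < lo + w:
                out.append(x)
                low, width = lo, w
                break
    return out

# A skewed binary source: H = 0.469 bits/symbol, but Huffman needs 1 bit/symbol.
pmf = {"a": Fraction(9, 10), "b": Fraction(1, 10)}
msg = list("aaaaaaaaab" * 10)  # 100 symbols: 90 'a', 10 'b'
m, k = encode(msg, pmf)
assert decode(m, k, len(msg), pmf) == msg
print(k)  # 48 bits for 100 symbols, versus 100 bits for any Huffman code here
```

The ideal cost of this message is about 46.9 bits, so the coder spends roughly 0.48 bits per symbol, something no integer-length code over a two-symbol alphabet can do.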