Finding roots of a polynomial - numerical methods in APL

Finding the roots of a polynomial can be very helpful if one aims to factorise it. Sometimes, solving a polynomial equation can yield useful mathematical quantities. For instance, finding the roots of the characteristic polynomial of a matrix will yield its eigenvalues.

The Newton-Raphson and Secant methods

The Newton-Raphson method is probably the most popular approach to approximating roots numerically. I decided against using it for a few reasons. Since the function I’m working with is a black-box function, I’d need to approximate its derivative at a point numerically. I’ve covered that in the past, but I can do something better (and more interesting!) this time. The Secant method is more practical for my use case. It can be thought of as a finite-difference approximation of Newton’s method. For a black-box function, a finite-difference estimate of the derivative is all a numerical method has to work with anyway.

Unsurprisingly, the Secant method, just like Newton’s method, can be described by a recurrence relation:

It follows from a finite-difference approximation of the derivative:

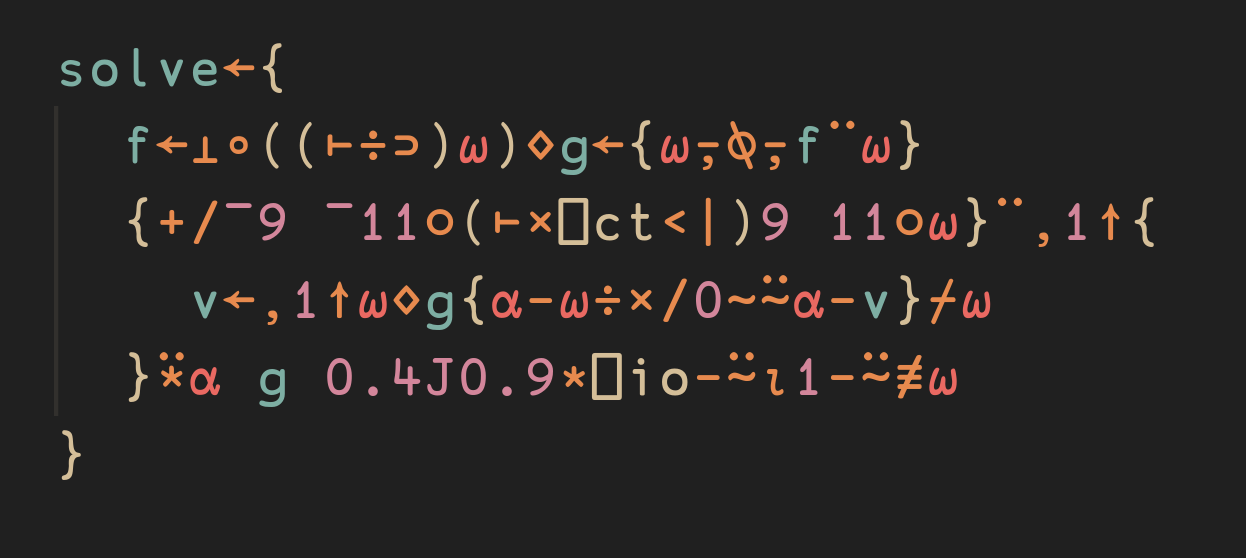
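For reference, here is the textbook form of that derivation. The indexing is assumed here rather than taken from the post: $x_n$ is the latest iterate and $x_{n-1}$ the one before it. Replacing $f'(x_n)$ in Newton's update with the slope of the secant line through the two most recent iterates gives the recurrence:

$$
x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)},
\qquad
f'(x_n) \approx \frac{f(x_n) - f(x_{n-1})}{x_n - x_{n-1}}
$$

$$
x_{n+1} = x_n - f(x_n)\,\frac{x_n - x_{n-1}}{f(x_n) - f(x_{n-1})}
$$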

To demonstrate the Secant method using APL, I will take a simple function and try to find one of its roots. Starting with , using the above formula verbatim, I arrived at the following operator:

The code assumes that and . There isn’t much to unpack: the vector v is just and , so consequently g is . The train below is a bit confusing, but ⊢÷⍥(-/)f¨ is equivalent to (-/⊢)÷(-/f¨), which simply divides the difference of the two points by the difference of their function values.
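For readers who don't read APL, here is a minimal Python sketch of the same idea. It is not a translation of the operator above. The name `secant`, the `iterations` parameter and the early stop on a flat secant line are my own assumptions, and the function x² − 2 is just a hypothetical example.

```python
def secant(f, x0, x1, iterations=10):
    """Approximate a root of f with the Secant method.

    x0 and x1 are two starting points; each step replaces the derivative in
    Newton's update with the slope of the line through the two latest points.
    """
    prev, cur = x0, x1
    for _ in range(iterations):
        f_prev, f_cur = f(prev), f(cur)
        if f_cur == f_prev:
            # Flat secant line (usually because the iteration has converged):
            # another step would divide by zero, so stop here.
            break
        # (difference of the points) / (difference of the function values),
        # the same quotient the APL train ⊢÷⍥(-/)f¨ computes for two points
        inverse_slope = (cur - prev) / (f_cur - f_prev)
        prev, cur = cur, cur - f_cur * inverse_slope
    return cur


# Hypothetical example: a root of x**2 - 2, starting from 1 and 2.
root = secant(lambda x: x * x - 2, 1.0, 2.0)
print(root)
```

The early `break` guards against division by zero once both function values become equal. In practice this only happens after the iterates have converged.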

With 10 iterations of the algorithm, I arrive at the following:

… which appears to indeed be one of the roots. But that’s not what I’m really after!

The Weierstrass method

The Weierstrass method can be used to solve a polynomial equation numerically. It has a few advantages, but the most important one is that it finds all the roots of the polynomial, whether they are real or complex. This lets us factorise the polynomial completely: simply speaking, turn it into a product of first-degree polynomials.

We can demonstrate the Weierstrass method on a simple polynomial:

We can rewrite it as a product of first-degree factors, as mentioned earlier.

The next steps are very similar to Newton’s method. For sufficiently large , , and . We pick , and as consecutive powers (starting with the -th power) of a complex number that is neither real nor a de Moivre number (a root of unity). The formulae follow:

While the usual Weierstrass method uses in the definitions of and , my modified version does not. This simplifies the implementation at the cost of slower convergence: more iterations are needed to reach the same precision.
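Here is the simultaneous variant described above, in which every update uses only values from the previous iteration. It is written out for a monic polynomial $f$ of degree $n$ with approximations $z_1, \dots, z_n$; this generic notation is assumed here, not taken from the post:

$$
z_m^{(k+1)} = z_m^{(k)} - \frac{f\bigl(z_m^{(k)}\bigr)}{\prod_{j \neq m} \bigl(z_m^{(k)} - z_j^{(k)}\bigr)},
\qquad m = 1, \dots, n,
\qquad z_m^{(0)} = (0.4 + 0.9i)^{m-1}
$$

The Gauss-Seidel-style variant instead puts the already updated $z_j^{(k+1)}$ into the product for every $j < m$.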

The implementation

I start my implementation of the Weierstrass algorithm by creating a function that evaluates the polynomial at a point. The Weierstrass algorithm requires the highest power of to have the coefficient 1 (that is, the polynomial must be monic), so I adjust for that:
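Here is a minimal Python sketch of such an evaluator. It assumes two things:

- The coefficients are listed from the highest power down, which is the order APL's ⊥ uses for polynomial evaluation.
- "Adjusting" means dividing by the leading coefficient, which leaves the roots unchanged.

The name `monic_evaluator` is hypothetical.

```python
def monic_evaluator(coeffs):
    """Return a function that evaluates the monic form of a polynomial.

    coeffs are listed from the highest power down. Dividing them all by the
    leading coefficient makes the polynomial monic without changing its roots.
    """
    lead = coeffs[0]
    monic = [c / lead for c in coeffs]

    def evaluate(x):
        # Horner's scheme: (((a0*x + a1)*x + a2)*x + ...); works for complex x too
        acc = 0
        for c in monic:
            acc = acc * x + c
        return acc

    return evaluate


p = monic_evaluator([2, -6, 4])  # hypothetical 2x**2 - 6x + 4 = 2(x - 1)(x - 2)
print(p(1), p(2), p(3))
```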

Since the algorithm will juggle around the values of and in a table, I add a function that will build it from given values of :
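In Python, such a table could be sketched as a pair of lists. The name `build_table` and the row order (approximations first, then their values) are assumptions for illustration.

```python
def build_table(f, zs):
    """Build the 2-row table the iteration works on.

    Row 0: the current root approximations z.
    Row 1: the polynomial's value f(z) at each of them.
    """
    zs = list(zs)
    return [zs, [f(z) for z in zs]]


# Hypothetical usage with f(x) = x**2 - 1:
table = build_table(lambda x: x * x - 1, [1, 2, 3])
print(table)
```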

Before the first iteration of the algorithm, I create a table of , , …, with the starting values set to consecutive powers of 0.4J0.9 (APL notation for the complex number 0.4+0.9i).
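The starting values could be generated like this in Python. `initial_guesses` is a hypothetical name, and the default seed is the post's 0.4J0.9.

```python
def initial_guesses(n, seed=0.4 + 0.9j):
    """Return the n starting values seed**0, seed**1, ..., seed**(n - 1).

    0.4+0.9j (written 0.4J0.9 in APL) is neither real nor a root of unity.
    Its modulus is not 1, so all the powers are distinct, and since most of
    them lie off the real axis the iteration can reach complex roots.
    """
    return [seed ** k for k in range(n)]


print(initial_guesses(4))
```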

The iteration function takes the whole table as its input, but I’m interested only in the first row ( values), because the second row should ideally be all zeros. I’d also like to round the results a little, preferably according to the comparison tolerance, so that very small real and imaginary parts are truncated; they are almost certainly insignificant. Finally, the iteration function will be executed ⍺ times, and ⍺ purposefully has no default value:
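Here is a hedged Python sketch of this driver. `iterate` and `clean` are hypothetical names. `tol=1e-10` merely stands in for APL's comparison tolerance; I'm not reproducing how the original code uses that tolerance.

```python
def clean(z, tol=1e-10):
    """Truncate real/imaginary parts whose magnitude is below tol.

    tol stands in for APL's comparison tolerance; its value is an assumption.
    """
    re = 0.0 if abs(z.real) < tol else z.real
    im = 0.0 if abs(z.imag) < tol else z.imag
    return complex(re, im)


def iterate(step, table, times):
    """Apply step to the table `times` times and return the cleaned first row.

    `times` has no default on purpose, like ⍺ in the APL version. Only row 0
    (the approximations) is returned; row 1, the values f(z), should be close
    to zero once the iteration has converged.
    """
    for _ in range(times):
        table = step(table)
    return [clean(z) for z in table[0]]


# Hypothetical usage with a dummy step that leaves the table unchanged:
print(iterate(lambda t: t, [[1 + 1e-13j, 2 - 3j], [0, 0]], 5))
```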

Now, for the most difficult part - the iteration function. I start by extracting the current values of zeroes:

Then, I fold the matrix with the single-iteration function and build a table out of its results:

The function is given the and values as ⍺ and ⍵:

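The correspondence between the iteration function and the formula above should be clear. As a little trick, to avoid having to remove the current zero from the v matrix, I simply remove the zeroes from the result of the subtraction. The current zero minus itself is the only zero there, as long as no two approximations coincide. The product of the remaining differences then divides the polynomial’s value at the current zero, and the quotient is subtracted from the current value.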
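Here is a minimal Python sketch of one full iteration: extract the approximations, update each of them, and rebuild the table. It assumes the simultaneous variant used in this post. Unlike the APL trick, it skips the current approximation by index instead of removing zero differences. `horner` and `weierstrass_step` are hypothetical names, and the cubic in the usage example is made up for illustration.

```python
def horner(coeffs, x):
    """Evaluate a polynomial (coefficients highest power first) at x."""
    acc = 0
    for c in coeffs:
        acc = acc * x + c
    return acc


def weierstrass_step(f, table):
    """One simultaneous Weierstrass (Durand-Kerner) update of the whole table.

    table[0] holds the current approximations, table[1] their values f(z).
    Every new approximation uses only the previous iteration's values.
    """
    zs, fzs = table
    new_zs = []
    for i, (z, fz) in enumerate(zip(zs, fzs)):
        denom = 1
        for j, other in enumerate(zs):
            if j != i:  # skip the z - z term by index
                denom *= z - other
        new_zs.append(z - fz / denom)
    return [new_zs, [f(z) for z in new_zs]]


# Hypothetical example: x**3 - 6x**2 + 11x - 6 = (x - 1)(x - 2)(x - 3).
coeffs = [1, -6, 11, -6]


def f(x):
    return horner(coeffs, x)


zs = [(0.4 + 0.9j) ** k for k in range(3)]
table = [zs, [f(z) for z in zs]]
for _ in range(100):
    table = weierstrass_step(f, table)
print(sorted(table[0], key=lambda z: z.real))
```

Skipping by index has one consequence if two approximations ever coincide: this sketch raises `ZeroDivisionError` instead of silently dropping a second zero difference.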

That’s a lot to unpack, but let’s test the implementation now. As a small spoiler for my future blog post, where I’ll be explaining eigenvalues, determinants and inverse matrices using the Faddeev-LeVerrier algorithm, I will compute the characteristic polynomial of a simple matrix:
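Ahead of that post, here is a small, hedged Python sketch of the Faddeev-LeVerrier recurrence, which is enough to reproduce a characteristic polynomial. It computes det(λI − A), which is monic. det(A − λI) differs from it by a factor of (−1)ⁿ and has the same roots. The function names are hypothetical.

```python
def matmul(A, B):
    """Plain-Python product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def char_poly(A):
    """Characteristic polynomial of A via the Faddeev-LeVerrier recurrence.

    Returns the coefficients of det(lambda*I - A), highest power first:
    [1, c_{n-1}, ..., c_0].
    """
    n = len(A)
    M = [[0] * n for _ in range(n)]  # M_0 = 0
    c = 1                            # c_n = 1
    coeffs = [1]
    for k in range(1, n + 1):
        # M_k = A M_{k-1} + c_{n-k+1} I
        AM = matmul(A, M)
        M = [[AM[i][j] + (c if i == j else 0) for j in range(n)]
             for i in range(n)]
        # c_{n-k} = -tr(A M_k) / k
        AM = matmul(A, M)
        c = -sum(AM[i][i] for i in range(n)) / k
        coeffs.append(c)
    return coeffs


print(char_poly([[2, 0], [0, 3]]))  # hypothetical diagonal matrix
```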

Since eigenvalues are just the roots of the characteristic polynomial, I try to find them using my new toy:

Let’s verify the eigenvalues I obtained with a pencil-and-paper calculation. From the definition of the eigenvector corresponding to the eigenvalue :

I also know that:

Moreover, this homogeneous equation has a nonzero solution if and only if its matrix is singular, that is:
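The chain of reasoning is written below in generic notation, assumed here: $A$ is the matrix, $\mathbf{v}$ an eigenvector and $\lambda$ its eigenvalue.

$$
A\mathbf{v} = \lambda\mathbf{v} = \lambda I\mathbf{v}
\;\Longrightarrow\;
(A - \lambda I)\,\mathbf{v} = \mathbf{0},
\qquad
\exists\,\mathbf{v} \neq \mathbf{0} \iff \det(A - \lambda I) = 0
$$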

Next up, I get the following:

… which is the characteristic polynomial obtained earlier. Continuing:

It is clear that the first root is 10, while the other two are the solutions of . Using the quadratic formula, I conclude that the other two roots are complex conjugates of each other and equal to and . This is unsurprising, since the polynomial has real coefficients. Finding the eigenvectors (which is out of the scope of this post) is left as an exercise to the reader.
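The quadratic-formula step can be sketched in Python with `cmath`. The quadratic below is a made-up example, not the one from this post. I chose it because its negative discriminant produces a conjugate pair.

```python
import cmath


def quadratic_roots(a, b, c):
    """Both roots of a*x**2 + b*x + c = 0 from the quadratic formula.

    cmath.sqrt accepts a negative discriminant, in which case the two roots
    of a real quadratic come out as a complex-conjugate pair.
    """
    d = cmath.sqrt(b * b - 4 * a * c)
    return (-b + d) / (2 * a), (-b - d) / (2 * a)


# Hypothetical quadratic x**2 - 2x + 5, whose discriminant is -16:
print(quadratic_roots(1, -2, 5))
```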

Summary

This third post in my “in APL” series demonstrates how beautifully and naturally certain mathematical concepts can be expressed in APL. The full source code for this blog post follows: