Implementing the Faddeev-LeVerrier algorithm in APL

The Faddeev-LeVerrier algorithm is one of my favourite algorithms related to linear algebra for a variety of reasons:

- It’s incredibly elegant

- It computes many things at once - the trace of a matrix, its determinant, inverse and the characteristic polynomial (and thus, its eigenvalues).

- The task of computing the characteristic polynomial usually requires the use of symbolic computation - one needs to compute to get a function of , while the Faddeev-LeVerrier algorithm can do it numerically.

Initial considerations⌗

The objective of the Faddeev-LeVerrier algorithm is to compute coefficients of the characteristic polynomial of a square matrix.

The first observation to be made is that and . The algorithm states:

A few observations assuming a by matrix :

- .

- The trace of is equal to .

- is equal to (a consequence of the previous observations about ).

- is equal to .

An implementation⌗

I start my implementation by asserting a few things about the environment and the input:

I’m interested only in matrices (arrays of rank 2 - 2=≢⍴⍵) and I specifically want them square -=/⍴⍵. If the conditions aren’t met, the algorithm returns an empty coefficient vector.

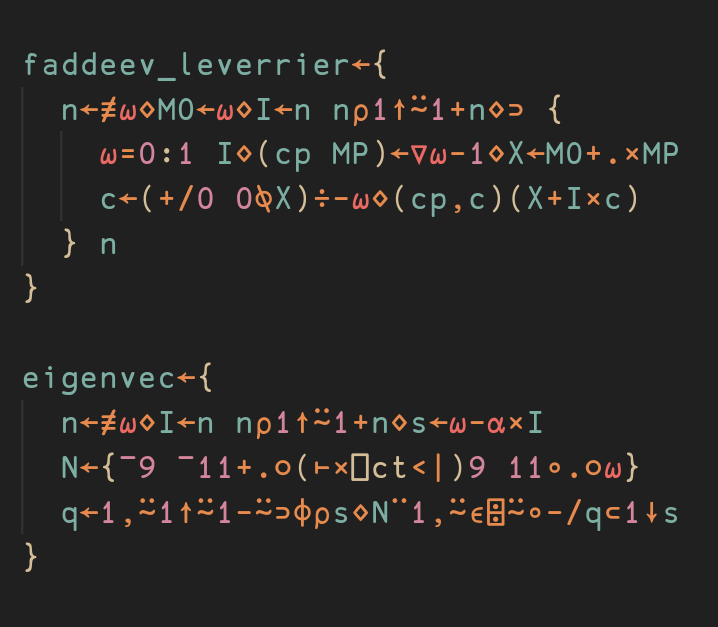

Next up, I define n as the number of rows/columns of the input matrix, M0 as the starting matrix for the algorithm, and I as an identity matrix of order n. The actual recursive implementation of the algorithm will return a vector of the characteristic polynomial coefficients and the matrix corresponding to the current iteration. Since the matrix corresponding to the last iteration is simply n n⍴0 (per observation 1), I will discard it:

I have also added a guard for the final iteration, which simply returns the identity matrix and 1 as the coefficient.

I recursively call the function asking for the pair of and the corresponding c value. I also multiply matrices M0 (the input matrix) and MP (the previous matrix), as it’ll prove itself useful in upcoming computations, first one of them being the computation of c for the current iteration. For this purpose, I take the trace of X and divide it by the negated iteration index. The dyadic transposition (axis rearrangement) 0 0⍉X yields the leading diagonal of X, while +/ sums it.

I am one step away from the final version of the algorithm, the only thing left is returning the result tuple:

The computation of the current M matrix is derived verbatim from the second equation of the algorithm.

Applications⌗

I will combine today’s post with the yesterday’s one about polynomial roots. Namely, the yesterday’s post already contains an example use of my faddeev_leverrier function. Let’s do something different today.

With a few not so beautiful hacks on top of my existing function, I can compute all the properties of my test matrix:

The function will return a vector of:

- inverse matrix

- trace

- characteristic polynomial

- determinant

The results appear to match my pencil and paper calculations (not presented in this blog post - as an exercise, feel free to compute these properties of my test matrix yourself). Of course, there is a way to compute the remaining properties besides the characteristic polynomial using more idiomatic APL:

The results appear to be the same:

To my surprise, though, it appears that the Faddeev-LeVerrier algorithm is faster for this particular test case! The benchmark data:

Replacing the generalised alternant with a (hopefully) more performant determinant function yields oddly similar results:

Eigenvectors⌗

The final part of my blog post will shed some more light on eigenvectors. Let’s compute the eigenvalues first:

For simplicity, I will present the pencil and paper calculations for alongside the APL code. I start with computing :

yields a homogenous equation system which needs to be solved. A very sad property of this system is that it’s underspecified, so up to are all functions of . Surprisingly, that’s not a problem, though! If we ignore the presence of , turns out one row of the matrix is redundant. If we assume that , in our example, we solve a non-homogenous system of equations with two variables.

Of course, one could scale as they please, and modify and accordingly. I also use the same real/imaginary truncation technique as outlined in the previous post to strip (likely) unnecessary real/imaginary parts. Finally, I test my code:

The results seem to match. Great!

Summary⌗

I believe that today’s post was much more involved than the previous ones. The full source code follows: