A Survey of Results on the Blum-Blum-Shub Pseudorandom Number Generator

Introduction.⌗

Blum-Blum-Shub (henceforth referred to as BBS) is a pseudorandom number generator uniquely based on the difficulty of factoring large composite numbers. It was introduced by Lenore Blum, Manuel Blum, and Michael Shub in 1986. It is notable for its so-called provable security under certain assumptions, luring many cryptographers to study it.

Let where and are two distinct large primes. The internal state step function is given by:

With being a random seed coprime to and greater than one. The following requirements are placed on and :

- They should be Sophie Germain primes, i.e. both and are also prime.

- They should be congruent to 3 modulo 4, i.e. and .

- should be as small as possible, ideally 1.

The random bits are extracted from the internal state at each step. The amount of bits extracted provides a trade-off between speed and security. The most common extraction method is to take the least significant bit of the internal state, but more bits can be extracted at once.

Quadratic residues.⌗

We will start with some preliminary definitions and theorems concerning number-theoretic basis of BBS.

Definition 1. An integer is called a quadratic residue modulo if there exists some such that .

Definition 2. The Jacobi symbol is defined as follows:

Definition 3. Consider like in the BBS generator. is then partitioned into two sets of equal cardinality - and where . Logically, none of the elements in are quadratic residues modulo and exactly half of the elements in are quadratic residues modulo .

Definition 4. A solution to the quadratic residuosity problem (QRP) decides whether some is a quadratic residue modulo .

Theorem 5. An unproven but considered by researchers highly likely assumption is that any PPT (probabilistic polynomial-time) algorithm that solves the QRP has the probability of success at most , where is small and in .

Many known partial solutions to the QRP orbit around prime factorisation of . In particular, quadratic residuosity is efficient to check for prime , and a number is a quadratic residue modulo if it’s a quadratic residue modulo every prime factor of . The practical implication of this is that the factorisation of can not be known by an adversary. More generally, finding hard to factor semiprimes is a well-known problem in RSA.

This leads many researchers to believe that QRP and prime factorisation are mostly interchangeable in that efficient factorisation of implies efficient solution to the QRP. Indeed, the most efficient known algorithm for solving the QRP is based on factorisation, but formally speaking an efficient solution to the QRP does not need to imply efficient factorisation of .

Digging deeper, there is also no proof of the statement that factorization is hard - albeit millenia of research have not yet come up with an efficient factorization method, so it must not be trivial. Accordingly, the security status of BBS is similar to that of RSA.

Circling back.⌗

Let’s discuss how this connects to BBS.

In the specific case of BBS, we have concrete evidence that the assumption of hardness of QRP can be equivalently replaced by the assumption of hardness of factorisation. This was proven by W. B. Alexi, B. Chor, O. Goldreich, and C. P. Schnorr. RSA/Rabin functions: certain parts are as hard as the whole. in SIAM J. Computing, 17(2):194-209, April 1988.

The reduction in Blum, Blum, Shub (1986) proceeds by demonstrating that, if there is a PPT algorithm that operates backwards on the BBS generator (i.e. after observing some consecutive outputs of the generator, it can predict the output preceding them) with a specific probability, then there is also a PPT algorithm that solves the QRP with the same probability.

The original construction assumes that we extract the lowest order bit of the internal state at each step, but it can be generalized to extract more bits at once. Specifically, U.V. Vazirani and V.V. Vazirani, Efficient And Secure Pseudo-Random Number Generation in 25th Annual Symposium on Foundations of Computer Science, 1984, showed that we can securely extract bits at each step.

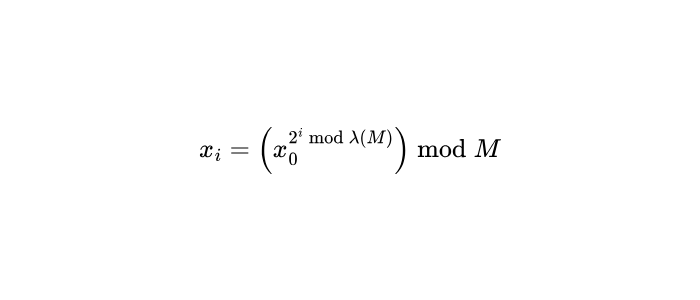

The generator can be seeked around to any position using Carmichael’s function of M, which degenerates to via Euler’s theorem, stating that

A concrete (non-asymptotic) proof of correctness is given by A. Sidorenko and B. Schoenmakers, Concrete Security of the Blum-Blum-Shub Pseudorandom Generator. Cryptography and Coding. They claim:

Theorem. Suppose the BBS pseudorandom generator extracting bits at a time is not -secure. Then there exists an algorithm that factors the modulus in expected time

where .

Moving on to factoring, the most efficient known algorithm is the General Number Field Sieve (GNFS), which has a sub-exponential complexity proportional to:

The authors thus approximate the number of clock cycles required to factor an -bit integer as:

Under the assumption that no algorithm can factor an -bit Blum prime in less than clock cycles, the BBS generator is -secure if:

where .

The paper demonstrates a practical application of this result: If we take outputs, bound to clock cycles and . Then, the recommended amount of bits of security is , while the amount of bits extracted at each step is .

Another result is given by S. Chatterjee, A. Menezes, and P. Sarkar Another Look at Tightness, where they demonstrate that some common exceedingly-liberal choices of and (in that case and ) lead to lack of tangible security and dismantle the proof. This is however due to the fact that is way too large, and the double-log bound for is at most or .

Security implications.⌗

It is generally assumed that and (and thus ) remain secret. Further, it is assumed that is secret and similar in magnitude to .

If and become public, we can create a seekable generator per the formula from the introductory section, as the Carmichael function of can now be feasibly computed. Further, we can approximate the period of the generator and produce a suitable distinguisher.

On the other hand, retaining and offers a performance improvement on the side of a legitimate generator. In this case, we observe that finding roots modulo , in general, is difficult. However, if and are known, we can compute the roots modulo and separately, and then combine them using the Chinese Remainder Theorem, resulting in three -bit multiplications rather than a -bit multiplication.

For a concrete exposition of how to do this, consider:

These squarings are faster because and are each roughly half the bit-length of .

We reconstruct using:

Then, the CRT gives:

Specifically, and can be cached as they are constant for a given . The computation usually proceeds via the EEA.

Toy experiments.⌗

We can begin by taking the two primes for granted and subsequently computing .

Then, we can precompute the CRT constants, which is particularly easy in Python:

C implementation.⌗

A fully-featured C implementation of the BBS generator is more involved. In this article we will primarily cover the cbbs-rng implementation produced by the author of this article.

The generator supports OpenMP parallelisation for faster generation of the starting state. Some preliminary feature detection code follows:

Most importantly, we use the new C23 feature - _BitInt - to define bit integers of arbitrary size. This allows us to work with large numbers without worrying about overflow or performance penalty as accurred with gmp. The values of , and must come from a cryptographically secure source, such as /dev/urandom on Linux or CryptGenRandom on Windows:

For primality testing we use generally use the Miller-Rabin test. However, for the sake of performance, we will perform some rudimentary trial division first to weed out the obvious non-primes. This is however not as simple as it may seem: trial divisions of large numbers are excruciatingly slow.

In the cbbs-rng implementation, we use a common trick in cryptography and data compression called the Barrett Reduction. Long story short, we can replace a division by a multiplication with a shift. For pre-generating the table of small primes, we use the Sieve of Atkin.

The Miller-Rabin test is then implemented as follows with a variable amount of iterations:

Nominally, the inner loop computing could also use Barrett reductions for improved performance, but the author observed that it is not particularly beneficial in practice.

All these components build up to the main part of our BBS generator which computes the parameters and under the condition that they are Sophie Germain primes and Blum integers.

The function will be necessary to compute the period of the generator. An efficient algorithm for that is given by Stein and implemented below:

Finally, the state of the BBS generator itself is represented, initialised and seeked as follows:

Simply advancing the generator is performed by the following procedures:

A parallel version of the generator can be implemented to slightly alleviate its slowness:

Via the CLI stub we can both do some rudimentary checks and generate some pseudo-random numbers. Alternatively, we can also test the output so that it can be fed into a statistical test suite such as the NIST SP 800-22 or Diehard tests:

Miscellaneous results.⌗

-

The period of the BBS generator is strictly tied to the Carmichael function. BBS is ultimately periodic with period . Hence minimising is crucial for security, as it maximises , which in turn tends to maximise .

-

The existence of one-way functions is equivalent to the existence of pseudorandom generators that pass all polynomial-time statistical tests. The proof of this is given by J. Hastad, R. Impagliazzo, L. Levin, and M. Luby. A Pseudorandom Generator from any One-way Function in SIAM J. Computing, 28(4):1364-1396, April 1999.

-

The so-called proof of security is rather misleading - its practical consequences are not as significant as it may seem. Most importantly, dated literature about BBS tends to underestimate the magnitude of the parameters to the generator. The magnitude of 6800 bits in the modulus results in extremely slow generation of pseudo-random numbers.

-

The generator is very slow. For in parallel mode, we generate only about 2.65MB/s of random data. yields a more depressing figure of 721.6kB/s. Predictably, the functions

__divmodbitint4and__mulbitint3constitute the majority of the runtime (>95%) with an equal proportion between them. Future improvements could involve the use of the Chinese Remainder Theorem for faster computation. Operation in the Montgomery domain could also be evaluated. The author hopes that future releases of GCC ship a__mulbitint3function that is not quadratic but perhaps uses Karatsuba or such. -

An simple implementation using GMP (and thus is not constant-time, and is more vulnerable to side-channel attacks) put together by the author at hand can accomplish the rate of about 3.1MB/s for . The author is interested in investigating this as a future project and specialising the parts of the GMP responsible for the speedup for the BBS generator.